Scientists from Imperial College London have found that variation between brain cells could speed up learning and improve the performance of both the brain and future AI.

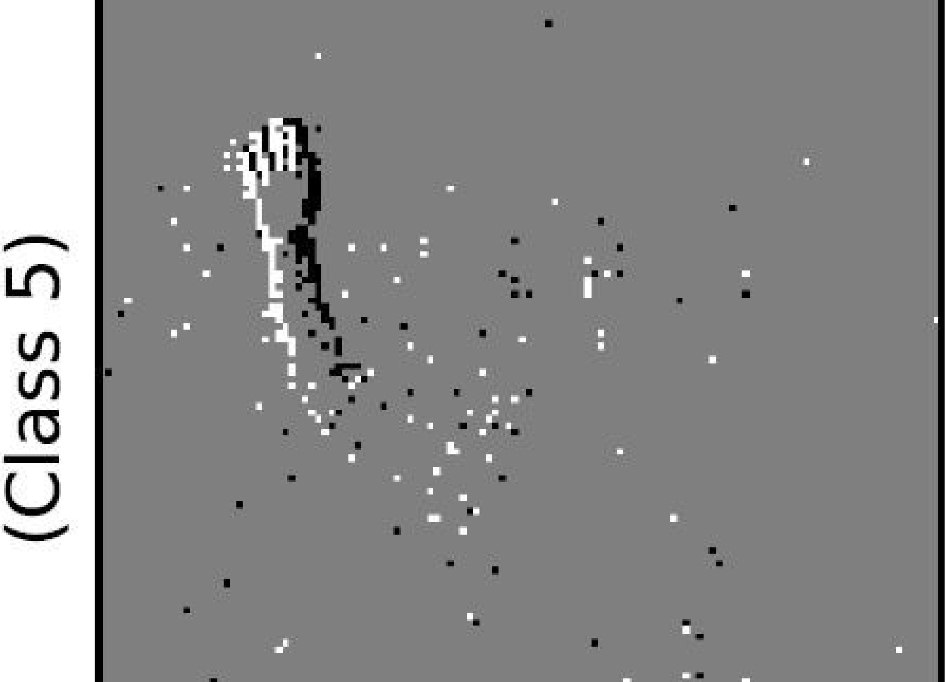

Neural networks identifying hand gestures. Image Credit: Perez-Nieves et al.

The new research found that when the electrical properties of individual cells were varied in simulations of brain networks, the networks learned faster than simulations in which all the cells were identical.

The researchers also found that the networks needed fewer of the tweaked cells to achieve the same results, and that they used less energy than models with identical cells.

The researchers say their findings could help explain why human brains are so good at learning. They add that the results could also help build better artificial intelligence systems, such as digital assistants that recognize faces and voices, or self-driving car technology.

“The brain needs to be energy efficient while still being able to excel at solving complex tasks. Our work suggests that having a diversity of neurons in both brains and AI fulfills both these requirements and could boost learning.”

Nicolas Perez, Study First Author and PhD Student, Department of Electrical and Electronic Engineering, Imperial College London

The study was published in the journal Nature Communications.

Why is a neuron like a snowflake?

The human brain is made up of billions of cells called neurons, which are connected in vast “neural networks” that allow us to learn about the world. Neurons are like snowflakes: they look the same from a distance, but on closer inspection it is clear that no two are exactly alike.

By contrast, every cell in an artificial neural network (the technology on which AI is based) is identical; only the connections between cells vary. Despite the speed at which AI technology is advancing, these networks do not learn as accurately or as quickly as the human brain, and the researchers wondered whether the lack of variability between cells might be one reason.

The researchers tested whether mimicking the brain by varying the properties of the cells in a neural network could improve learning in AI. They found that variability between cells improved learning and reduced energy consumption.

“Evolution has given us incredible brain functions, most of which we are only just beginning to understand. Our research suggests that we can learn vital lessons from our own biology to make AI work better for us.”

Dr Dan Goodman, Study Lead Author, Department of Electrical and Electronic Engineering, Imperial College London

Tweaked timing

To perform the study, the scientists focused on tweaking each cell’s “time constant”: how quickly it decides what to do based on what the cells connected to it are doing. Some cells decide quickly, looking only at what the connected cells have just done. Others are slower to react, basing their decision on what the other cells have been doing over a longer period.
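To make the idea of a time constant concrete, here is a minimal sketch that assumes each cell behaves as a simple leaky integrator: at every step its state decays by a factor exp(-dt/τ) and mixes in the new input. This is a textbook simplification chosen for illustration, not the exact model used in the study, and the function `leaky_integrate` and the pulse input are hypothetical.

```python
import math


def leaky_integrate(inputs, tau, dt=1.0):
    """Return the state trace of a leaky integrator with time constant tau.

    Each step, the previous state decays by alpha = exp(-dt / tau) and the
    new input is blended in with weight (1 - alpha). A small tau forgets
    quickly; a large tau averages over a longer stretch of history.
    """
    alpha = math.exp(-dt / tau)
    v = 0.0
    trace = []
    for x in inputs:
        v = alpha * v + (1.0 - alpha) * x
        trace.append(v)
    return trace


# Hypothetical input: five steps of activity from connected cells, then silence.
pulse = [1.0] * 5 + [0.0] * 10

fast = leaky_integrate(pulse, tau=1.0)   # a "fast" cell
slow = leaky_integrate(pulse, tau=10.0)  # a "slow" cell

print(f"end of pulse:  fast={fast[4]:.3f}, slow={slow[4]:.3f}")   # fast=0.993, slow=0.393
print(f"after silence: fast={fast[-1]:.3f}, slow={slow[-1]:.3f}")  # fast=0.000, slow=0.145
```

The fast cell tracks the input almost immediately and forgets it just as quickly, while the slow cell responds sluggishly but still carries a trace of the pulse ten steps after it ended.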

After varying the cells’ time constants, the researchers gave the networks several standard machine learning tasks: classifying images of clothing and handwritten digits, recognizing spoken digits and commands, and identifying human gestures.

The results showed that when the network was allowed to combine fast and slow information, it was able to solve tasks in more complex, real-world settings.
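Under the same leaky-integrator assumption, the sketch below illustrates one way mixing fast and slow cells can help. Two hypothetical input streams end with the same three steps of input but differ in whether there was an earlier pulse. A population in which every cell shares one short time constant ends up in almost the same state for both streams, while a population spanning fast and slow time constants can still tell them apart. The specific τ values and stream lengths are arbitrary choices for illustration, not values from the paper.

```python
import math


def final_state(inputs, tau, dt=1.0):
    """Run a leaky integrator over inputs and return its state at the last step."""
    alpha = math.exp(-dt / tau)
    v = 0.0
    for x in inputs:
        v = alpha * v + (1.0 - alpha) * x
    return v


def separation(stream_a, stream_b, taus):
    """Largest difference, across the population, between the final states
    produced by two input streams. Zero means the population cannot tell them apart."""
    return max(abs(final_state(stream_a, t) - final_state(stream_b, t)) for t in taus)


# Two hypothetical streams: identical final three steps, different early history.
early_and_late = [1.0] * 5 + [0.0] * 20 + [1.0] * 3
late_only = [0.0] * 25 + [1.0] * 3

homogeneous = [2.0, 2.0, 2.0, 2.0]      # every cell shares one short time constant
heterogeneous = [1.0, 3.0, 10.0, 30.0]  # fast and slow cells mixed

print(f"homogeneous:   {separation(early_and_late, late_only, homogeneous):.3f}")    # 0.000
print(f"heterogeneous: {separation(early_and_late, late_only, heterogeneous):.3f}")  # 0.071
```

Only the slow cells (τ = 10 and τ = 30) still remember the early pulse; the fast cells in both populations respond mainly to the recent input, so the mixed population carries information on both time scales.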

When the researchers changed the amount of variability in the simulated networks, they found that the best-performing networks had roughly the same amount of variability as is found in the brain. This suggests that the brain may have evolved to have just the right amount of variability for optimal learning.
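The article does not say how the amount of variability was measured or controlled. One common way to express it, assumed here purely for illustration, is the coefficient of variation (CV: standard deviation divided by mean) of the time constants. The sketch below draws time constants from a gamma distribution, which keeps them positive; for shape k and scale θ the mean is kθ and the CV is 1/√k, so a target mean and CV fix both parameters. The function name and the values used are hypothetical.

```python
import random
import statistics


def sample_time_constants(n, mean_tau, cv, seed=0):
    """Draw n positive time constants with a given mean and coefficient of
    variation (std / mean) from a gamma distribution. cv=0 gives identical cells."""
    if cv == 0:
        return [mean_tau] * n
    shape = 1.0 / cv**2        # gamma distribution: CV = 1 / sqrt(shape)
    scale = mean_tau / shape   # gamma distribution: mean = shape * scale
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return [rng.gammavariate(shape, scale) for _ in range(n)]


for cv in (0.0, 0.25, 0.5, 1.0):
    taus = sample_time_constants(10_000, mean_tau=20.0, cv=cv)
    mean = statistics.fmean(taus)
    measured_cv = statistics.pstdev(taus) / mean
    print(f"target CV {cv:.2f}: mean tau {mean:.1f}, measured CV {measured_cv:.2f}")
```

Setting `cv=0` recovers the identical-cell baseline, and increasing it widens the spread of time constants while keeping the average fixed, which separates "how much variability" from "how fast the cells are on average".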

“We demonstrated that AI can be brought closer to how our brains work by emulating certain brain properties. However, current AI systems are far from achieving the level of energy efficiency that we find in biological systems. Next, we will look at how to reduce the energy consumption of these networks to get AI networks closer to performing as efficiently as the brain.”

Nicolas Perez, Study First Author and PhD Student, Department of Electrical and Electronic Engineering, Imperial College London

Journal reference:

Perez-Nieves, N., et al. (2021) Neural heterogeneity promotes robust learning. Nature Communications. doi.org/10.1038/s41467-021-26022-3.